“Assistive AI vs Generative AI” and the curse of common sense

“It isn’t generative AI; it’s assistive AI.”

“It’s all about assistive AI vs. generative AI.”

“It’s simple: Assistive AI is good. Generative AI is bad.”

I’ve been seeing it a lot lately. Maybe you have, too. The first time I saw it, I just shrugged. It was incorrect, but not importantly incorrect. The tenth time I saw it, it made the hair stand up on the back of my neck.

It’s not just that the distinction is meaningless. It’s that people are clearly quoting something they’ve read. Something incorrect. And that usually means that someone, somewhere, has written a viral post.

Unpacking several layers of misinformation

Before we get into this, let’s be clear: This isn’t about “defending AI.”

This is about ensuring that the words we say mean things. It’s about understanding the technology that we are using, building, and (hopefully) regulating.

And it’s about a culture that has started to prize intuition and common sense over research and education.

Because this distinction sounds right. It sounds intuitively correct — of course, it’s fine to “assist.” Assisting is different from creating.

The problem is that this classification system is inherently meaningless.

Generative AI is a type of technology. It’s the same technology used in medical science. It’s the same technology used in data science and environmental research.

However, this term has been hijacked by people who are not technologists to imply creative generation. Today, it is “common sense” that generative AI is an AI system that generates creative works.

And this isn’t true.

So, that’s the first misunderstanding.

The second misunderstanding is conflating a tool with its use.

A hammer can be used on nails. It can also be used on skulls.

A hammer is a tool. Murder is a use case.

Assistive AI is a use of technology. It is also often built on machine learning and, yes, generative AI.

Saying that you don’t support generative AI but you do support assistive AI is like saying you don’t support hammers, but you do support hammering nails. While a hammer isn’t the only way to drive a nail, it is the most common one.

And while you can crack open a skull with a hammer, that isn’t the primary use case of a hammer, either.

Let’s dig a little deeper

Many “assistive AI” technologies rely on generative AI as their underlying technology.

Think automatic captioning and transcription, summarization, navigation systems. Again, that isn’t to say that all assistive AI technologies use generative AI. What is important is that these things are not mutually exclusive.

On a technological level, the confusion is two-fold:

1) People are confusing “generative AI” with “creative generation.”

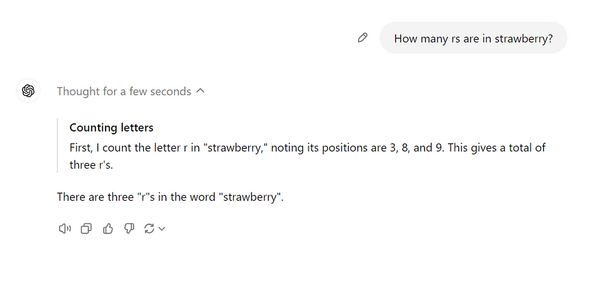

2) The AI itself is now regurgitating this information, hilariously calling attention to a broad fault in LLMs.

And I think #2 is quite interesting. Because now, if you ask the AI itself what “generative AI” is, or ask it to compare “generative AI vs. assistive AI,” it will actually give you the wrong answers based on a handful of blog posts.

At this point, you might say: Well, so the definitions have changed. And then you’ll call me a cuck or something, because we are online and people are oddly passionate lately.

Well, no. Because it’s still not a useful way to look at the world. There is no true delineation between an “assistive AI” and a “generative AI.” Only an intuitive one.

An assistive AI system that corrects grammar, drafts email replies, and summarizes your meetings is very likely to be generative AI. If you are using the versions of those features built into Microsoft or Google products, it almost certainly is.

In other words, for nearly all practical purposes, people are using “assistive AI” to refer to a subset of “generative AI.”
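If it helps to see that written down, here is a minimal sketch of the idea that “how it is built” and “what it is used for” are two separate labels. Everything in it is made up for illustration: the `Technology` and `Use` categories, the `System` record, and the labels attached to each example are my assumptions, loosely following the examples in this post, not an official taxonomy.

```python
from dataclasses import dataclass
from enum import Enum


class Technology(Enum):
    """How a system is built (hypothetical, deliberately coarse)."""
    GENERATIVE = "generative"
    OTHER = "other"


class Use(Enum):
    """What a system is used for (hypothetical, deliberately coarse)."""
    ASSISTIVE = "assistive"
    CREATIVE = "creative"
    MEDICAL = "medical"


@dataclass(frozen=True)
class System:
    name: str
    technology: Technology
    use: Use


# Illustrative labels only; real products would need checking one by one.
SYSTEMS = [
    System("meeting summarizer", Technology.GENERATIVE, Use.ASSISTIVE),
    System("email reply drafter", Technology.GENERATIVE, Use.ASSISTIVE),
    System("image generator", Technology.GENERATIVE, Use.CREATIVE),
    System("X-ray reading aid", Technology.GENERATIVE, Use.MEDICAL),
    System("rule-based screen reader", Technology.OTHER, Use.ASSISTIVE),
]


def generative_and_assistive(systems):
    """Return names of systems that carry both labels at once."""
    return [
        s.name
        for s in systems
        if s.technology is Technology.GENERATIVE and s.use is Use.ASSISTIVE
    ]


def generative_uses(systems):
    """Return every distinct use that a generative system is put to."""
    return {s.use for s in systems if s.technology is Technology.GENERATIVE}
```

Calling `generative_and_assistive(SYSTEMS)` returns a non-empty list, which is the whole point: the two labels sit on different axes, so “assistive vs. generative” is not an either/or.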

Digression: OK, wait, what is generative AI?

At this point, you might be exasperated: OK, if everyone is using “generative AI” to mean creative generation, why not just go with it?

Well, because that leaves us with no room to describe the large share of generative AI technologies that have nothing to do with creative work.

Consider DolphinGemma, a generative model built to help researchers analyze and generate dolphin vocalizations, or MedGemma, a generative model that helps interpret medical images such as X-rays.

If we allow this language to be hijacked, we will have no way to discuss these uses of generative AI.

And while we’re at it…

This debate is also kind of co-opting the term “assistive.” Assistive technology usually refers to technologies for people with disabilities, such as screen readers.

But people are using “assistive AI” to mean things like “summarizes meetings so I don’t need to attend them” or “automates Jira because everyone hates Jira.”

It’s a way to ethically launder a utility under a term that is already known to be morally pure.

Have your cake, eat it too

Here’s the reality: people are slotting AI into buckets to feel better.

There’s now the AI “I really want to use and feel good using” and the AI “I don’t want to use and feel other people should feel bad about using.”

From the start, this was the very real and present danger of attaching moralism to a technology.

And don’t get me wrong. There is a very real distinction between AI systems that generate creative works and other forms of AI/ML, including other forms of generative AI.

But the right name for that distinction isn’t “generative AI.” We can call it something else, anything else: plagiarism machine, stochastic parrot, genAI (derogatory), whatever you want.

But we do need to make the distinction.

One major issue is that this is driving needless conflict. Continued confusion among AI, ML, LLMs, and GenAI means that no one knows whether they are doing the right or wrong thing, and they’re constantly accusing each other of doing the wrong thing.

But a broader issue is that not understanding this technology renders opinions meaningless on a grand scale. Companies won’t regulate themselves if we don’t understand what needs to be regulated; they won’t follow ethical guidelines if we don’t understand what we want.